When ChatGPT became available to the public in late 2022, few could guess just how quickly the AI chatbot would change the tech industry. But in the months after ChatGPT came online, nearly every tech giant scrambled to position itself as an AI-first company. Well, everyone but Apple. While Microsoft, Meta, Google, and Amazon spoke about embracing artificial intelligence in 2023, Apple remained relatively quiet.

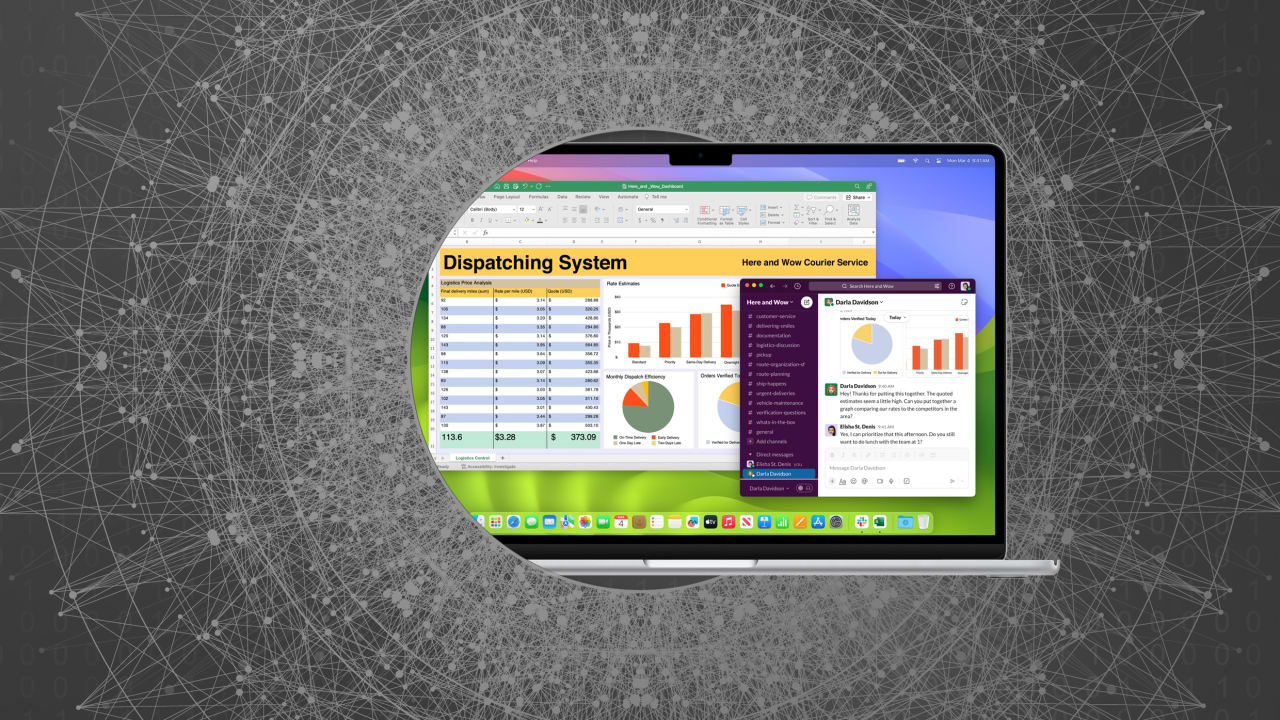

But Apple is setting a new tone regarding AI in 2024, as evidenced by its latest product release earlier this week, the M3 MacBook Air. For the first time, the company is advertising its hardware as an AI-running machine, boasting that the new MacBook is the “world’s best consumer laptop for AI.” Never before has Apple tied its hardware to AI so concretely.

Yet dig deeper into this new marketing narrative and you’ll see that Apple is effectively forced to admit that you must use third-party apps if you want the MacBook Air to be a “laptop for AI.” Its marketing notes that the MacBook Air can run generative AI apps like Microsoft Copilot, CapCut, Pixelmator Pro, Adobe Firefly, and more. Nowhere does it mention Apple’s own apps, which lack jaw-dropping generative AI features.

However, this AI narrative is likely to shift this summer when Apple previews its upcoming operating systems, including iOS 18 for iPhone and macOS 15 for the Mac. It is with these releases that Apple is expected to bake in AI features across its apps and services. Instead of just playing catch-up, Apple may be ready to upend consumers’ notions of what they can expect from AI tools, particularly in two specific ways.

What generative AI features will Apple offer?

When it comes to generative AI, the most obvious use case—and the place where it’s needed desperately—is in Apple’s voice assistant, Siri. Once the pacesetter for natural language processing, Siri is now more than a decade old, and in terms of its capabilities and comprehension, it still pales in comparison to other voice assistants that came after it, such as Amazon’s Alexa and Google Assistant. And compared to ChatGPT, Siri feels like it’s from the Stone Age.

But Apple is likely to overhaul Siri for its upcoming software releases by turning it into a ChatGPT-like chatbot powered by a generative AI large language model (LLM). Indeed, in summer 2023, Bloomberg reported that Apple was working internally on an LLM chatbot dubbed “AppleGPT.”

An “AppleGPT”-powered Siri, if it performs as well as ChatGPT, could make Siri useful for the first time in its history. It could enable continuous conversations, in which Siri takes into account what the user asked previously to generate the most accurate response, and it could deliver more robust and relevant answers.

Going further, system-wide textual generative AI could be used to summarize documents, emails, and long text messages received on an iPhone or Mac. Imagine someone emails you a 50-page PDF. The Mail app could offer a quick summary of what the PDF contains, along with its most salient points.

When it comes to visual generative AI features, Apple’s Photos and iMovie apps are also ripe for an AI update. It seems almost certain that users will soon be able to remove unwanted items from a photo or video with the swipe of a finger, or generatively fill the space just outside a photo’s frame to “zoom out” of the image. Apple could also add AI editing capabilities to iMovie: Click a button, and iMovie will assemble an edited film from the video clips you’ve selected.

Of course, these examples are just conjectures based on rumors and the known capabilities of other generative AI tools out there. They’d be nice-to-have features, but not earth-shattering. Other AI tools can already do these things.

Yet there is one groundbreaking innovation Apple has in the works that may make its generative AI technology better than the rest.

Apple’s AI chatbot may live on your device, not in the cloud

Currently, the major publicly available AI chatbots, including OpenAI’s ChatGPT and Google’s Gemini, live in the cloud, meaning on powerful remote servers potentially thousands of miles away. So when you give one of these chatbots a command, it isn’t computing that command on your PC or smartphone. Instead, it sends your query to remote servers that process the request and send the answer back to you.

However, this type of system has two big drawbacks. First, it lengthens the time the chatbot takes to reply, since your data has to be sent off to a server and analyzed there. Second, it hands your data to a remote server, and who knows what the company that owns the chatbot does with it after that. Maybe it deletes it, but maybe it uses it to build up a profile about you, keeping a log of every request you give the AI.

Yet there is a technical reason generative AI chatbots are cloud-based: Generative AI tasks require a ton of processing power and memory, resources most computers and phones don’t have (unless, perhaps, you have a MacBook with an M3 chip). Apple’s marketing for the new MacBook Air, as well as a recently published paper by some of its researchers, suggests the company may have found a way to allow an AI chatbot to live directly on your Mac or iPhone. This would be a game changer for the AI industry, from both a speed and a user-privacy perspective.

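In the December 2023 paper titled “LLM in a flash: Efficient Large Language Model Inference with Limited Memory,” researchers at Apple described a way to run large LLMs locally on devices that don’t have enough memory to hold the entire model. Their approach keeps the model’s parameters in the device’s flash storage and loads only the parts it needs into memory on demand, which, according to the paper, allows models up to twice the size of the available DRAM to run. If this performs well (and Apple’s MacBook Air marketing leads me to believe Apple knows it does), Apple may be able to run most of its generative AI tools on your own Apple device, not on its servers.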

In December 2023, Google introduced its new AI model, Gemini, and the company offers a lite version called Gemini Nano that runs on Pixel 8 Pro devices. This lets the Pixel 8 Pro carry out simpler AI tasks on the device, such as summarizing recordings made in the Recorder app. But Gemini Nano doesn’t have the power to support on-device chatbots or advanced generative AI image creation.

If Apple’s publicly available paper is any indication, the company may be further along than its competitors when it comes to finding ways for our everyday devices to run these complex AI tools locally. “We believe as LLMs continue to grow in size and complexity, approaches like this work will be essential for harnessing their full potential in a wide range of devices and applications,” Apple’s paper states.

That means that if the new Siri chatbot lives directly on your iPhone or Mac, it won’t need to send a request about, say, a health concern to Apple’s servers to analyze and return the answer. And because the request never leaves your device, Apple never gets that data about you. Likewise, if you want to use generative AI to expand the borders of a photograph you took, you wouldn’t need to send that photo to Apple’s servers to do the AI heavy lifting; your Mac or iPhone would do it on its own. Apple would never “see” your photo or the alterations you made to it.

If Apple can enter the AI arms race while delivering unrivaled privacy and speed because its chatbot and other advanced AI tools live on the user’s device, it will give the company a giant leg up over its competitors. That’s an advantage they likely won’t be able to match, since most Android phones and Windows PCs lack chips that can equal the power of the iPhone’s A17 Pro or the Mac’s M3 series. It would also be very on-brand, not just for Apple, which touts its privacy stance every chance it gets, but for CEO Tim Cook, who frequently talks about the importance of being best, not first.